I’ve been testing the Clever AI Humanizer tool to rewrite AI content so it sounds more natural and less detectable, but my results are mixed. Sometimes it improves flow and tone, other times it feels off or still looks obviously AI generated. Can anyone share tips, settings, or workflows that make this tool work better for long-form content and SEO, or suggest better alternatives that keep text human-like without hurting rankings?

Clever AI Humanizer: My Actual Experience & Test Results

I’ve been going through a bunch of AI “humanizer” tools lately, starting with the free ones, and Clever AI Humanizer is the one I’ve spent the most time hammering on.

Stuff in this niche changes all the time. Tools get pushed, patched, re-launched, and then quietly die when they stop working with new detectors. So I wanted to see where Clever AI Humanizer stands right now, not based on some months-old blog post.

The actual Clever AI Humanizer link (because yes, there are fakes)

The real site is:

That is the only legit Clever AI Humanizer I’ve found.

Reason I’m spelling this out: I’ve had people message me asking for the “real” URL after landing on random clones from Google Ads. Some of those copycat tools are piggybacking off the name, then hitting you with subscriptions, fake “premium” tiers, etc.

As far as I’ve seen:

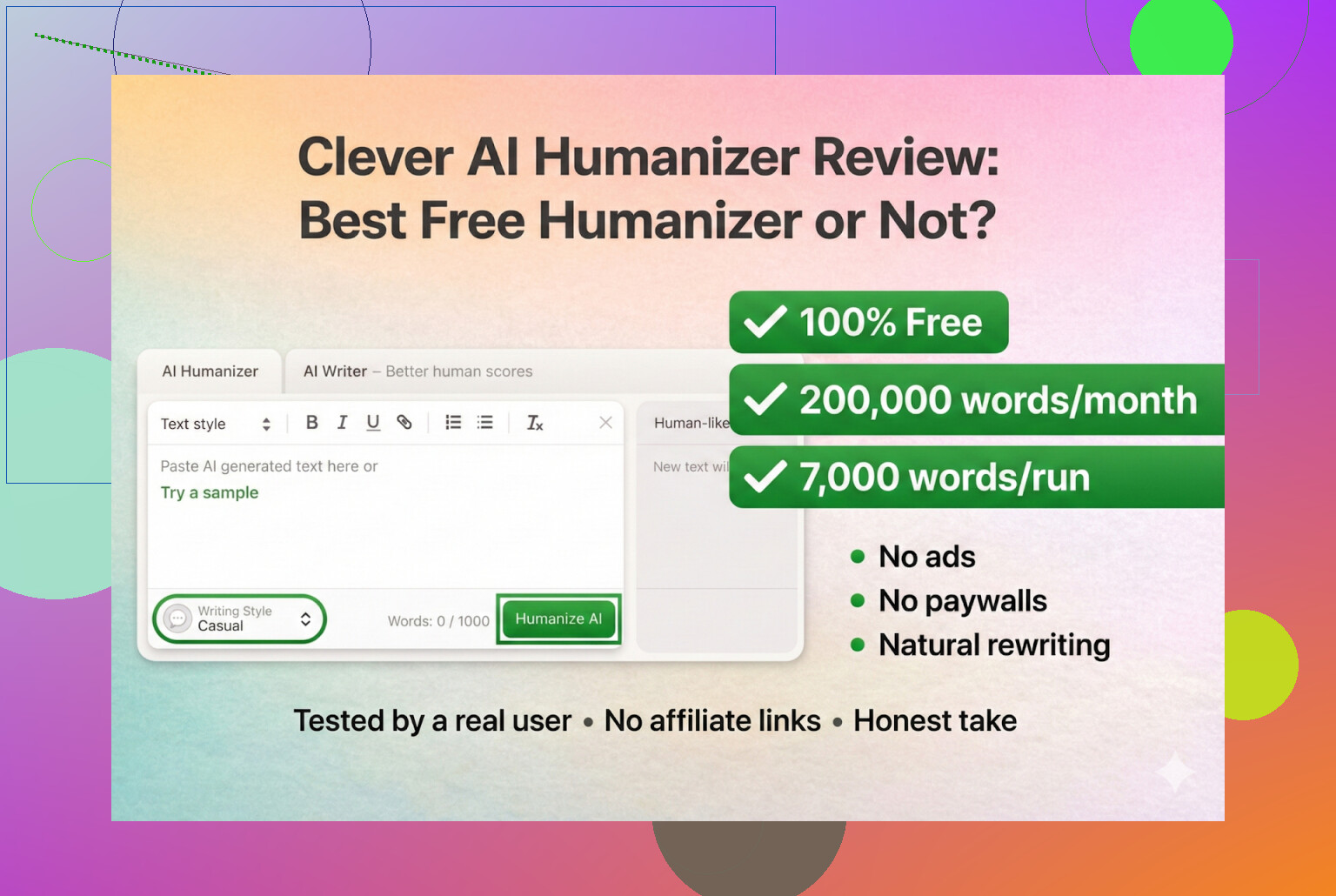

- Clever AI Humanizer has no paid plans

- No upsells, no hidden “unlock full mode” stuff

- If someone is asking you for money under that brand, you’re almost definitely not on the right site

So yeah, double-check the address bar.

How I tested it

I didn’t want to bias the test by writing my own draft, so I went full AI-on-AI:

- Used ChatGPT 5.2 to generate a 100% AI-written article about Clever AI Humanizer.

- Took that raw AI text and dropped it into Clever AI Humanizer.

- Ran the result through multiple AI detectors and then back through ChatGPT to judge quality.

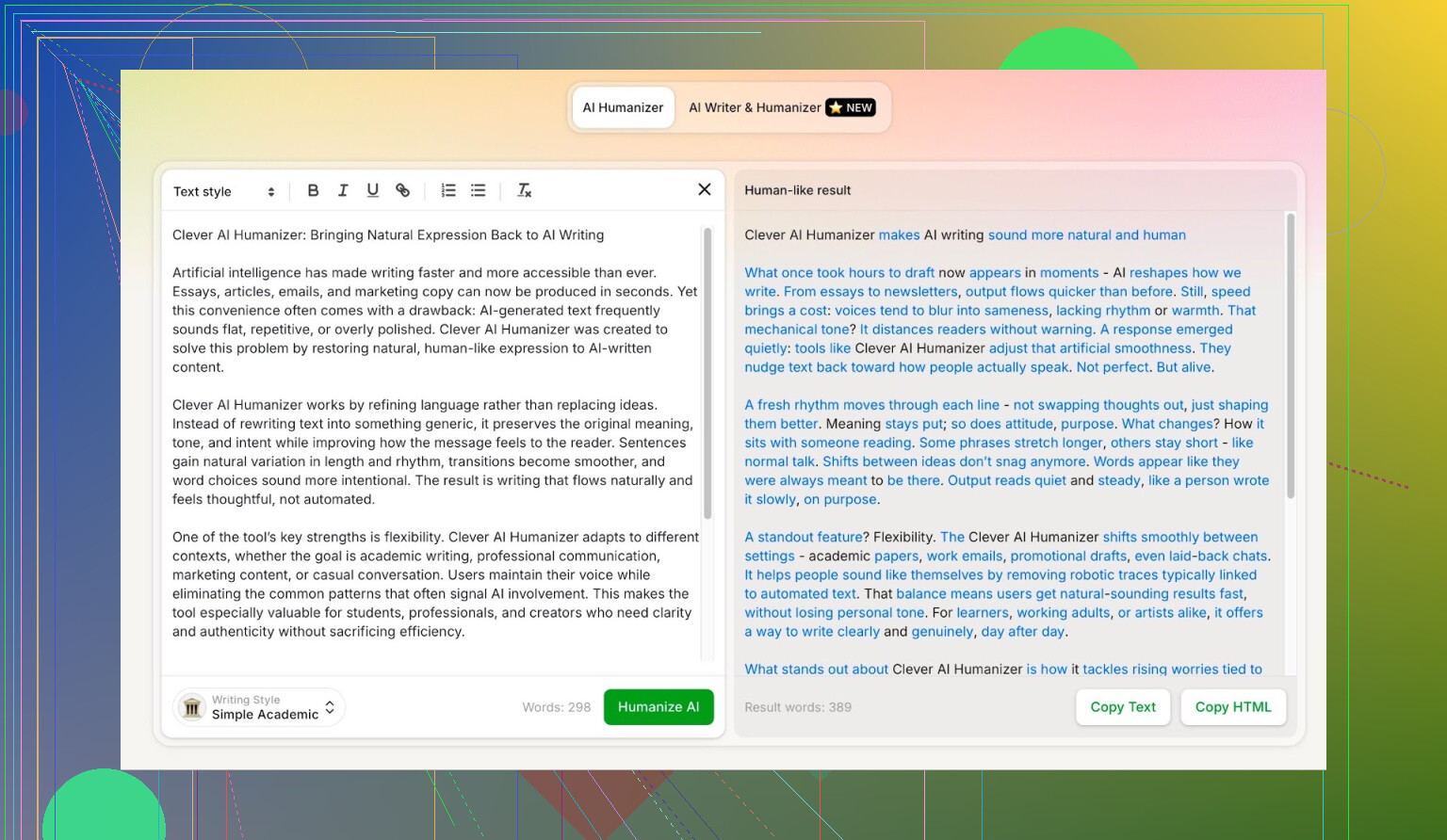

For the first round, inside Clever AI Humanizer I picked the Simple Academic style.

That option is interesting because:

- It uses light academic phrasing, but not full-on scholarly writing.

- This kind of “in-between” style tends to be tricky for a lot of detectors.

- It’s also one of the most unforgiving modes for humanizers, since it needs to sound structured without being robotic.

My guess is that this half-formal style helps it dodge some detector patterns while still feeling normal to a human reader.

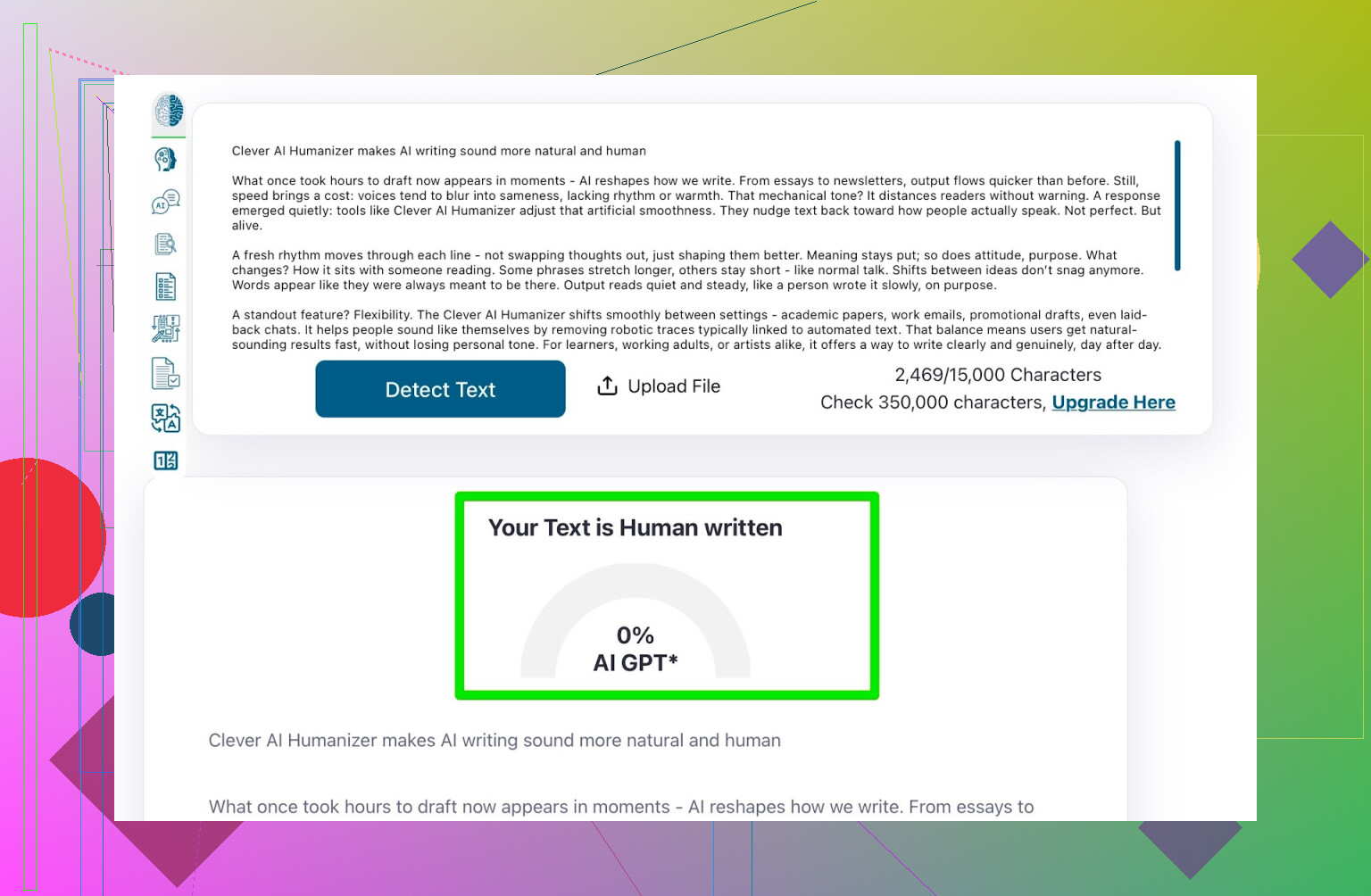

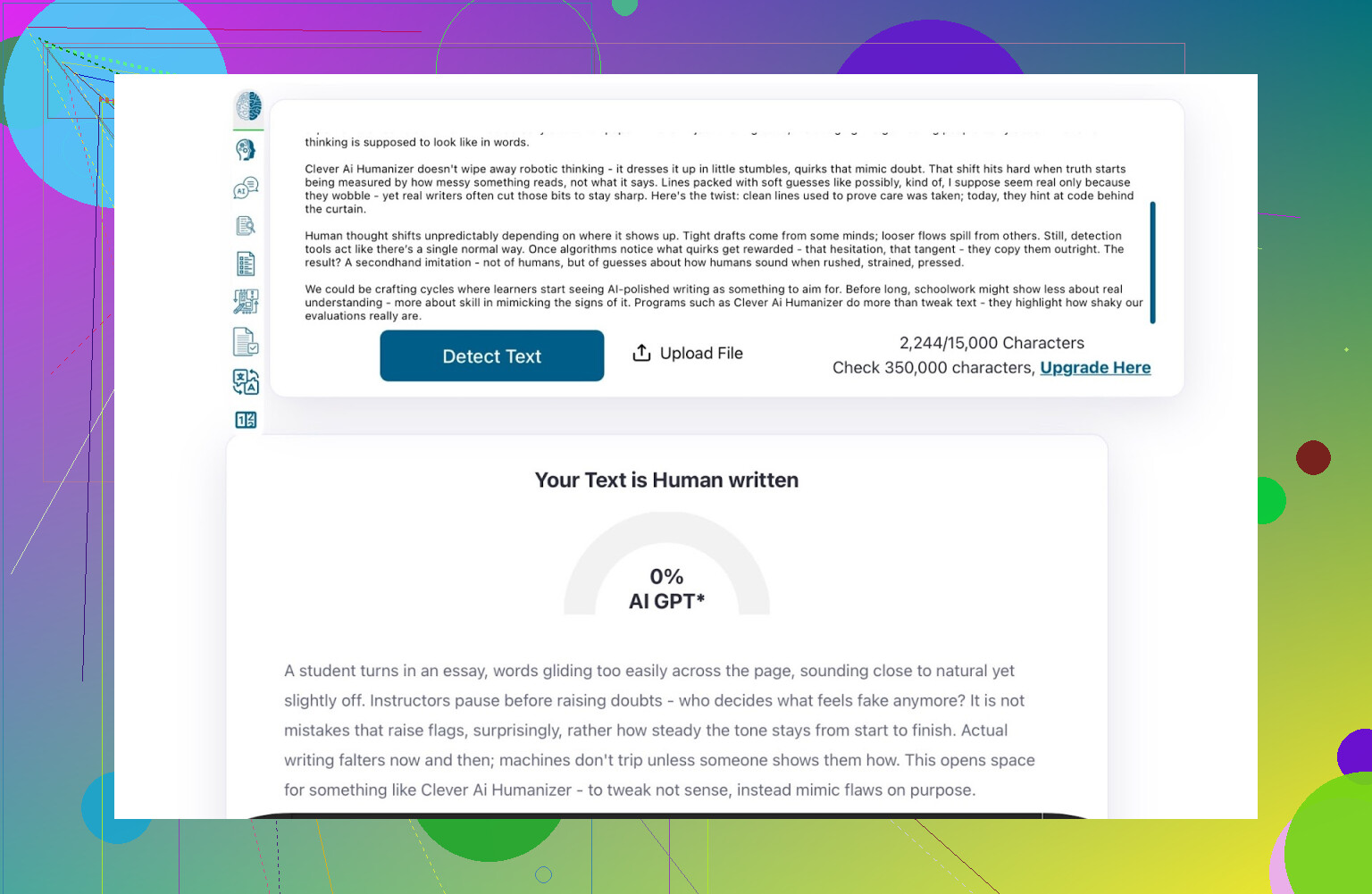

Detector check: ZeroGPT

First stop: ZeroGPT.

I don’t put a ton of blind trust into this tool, to be honest. It literally marked the U.S. Constitution as “100% AI,” which is… wild. But it’s still one of the most searched detectors, and a lot of teachers and clients use it, so it matters.

Result for the Clever AI Humanizer output:

- ZeroGPT: 0% AI

ZeroGPT called it fully human.

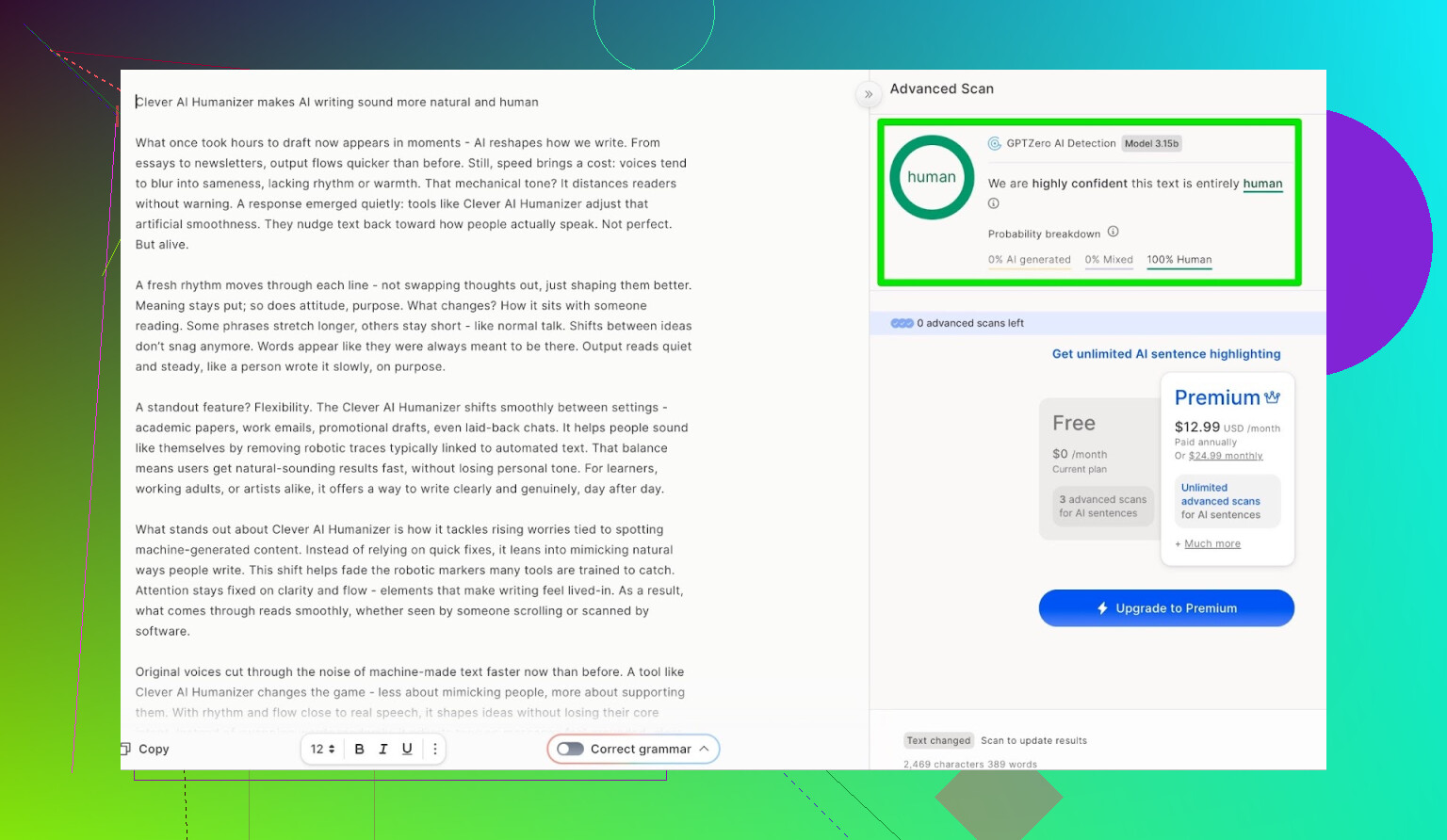

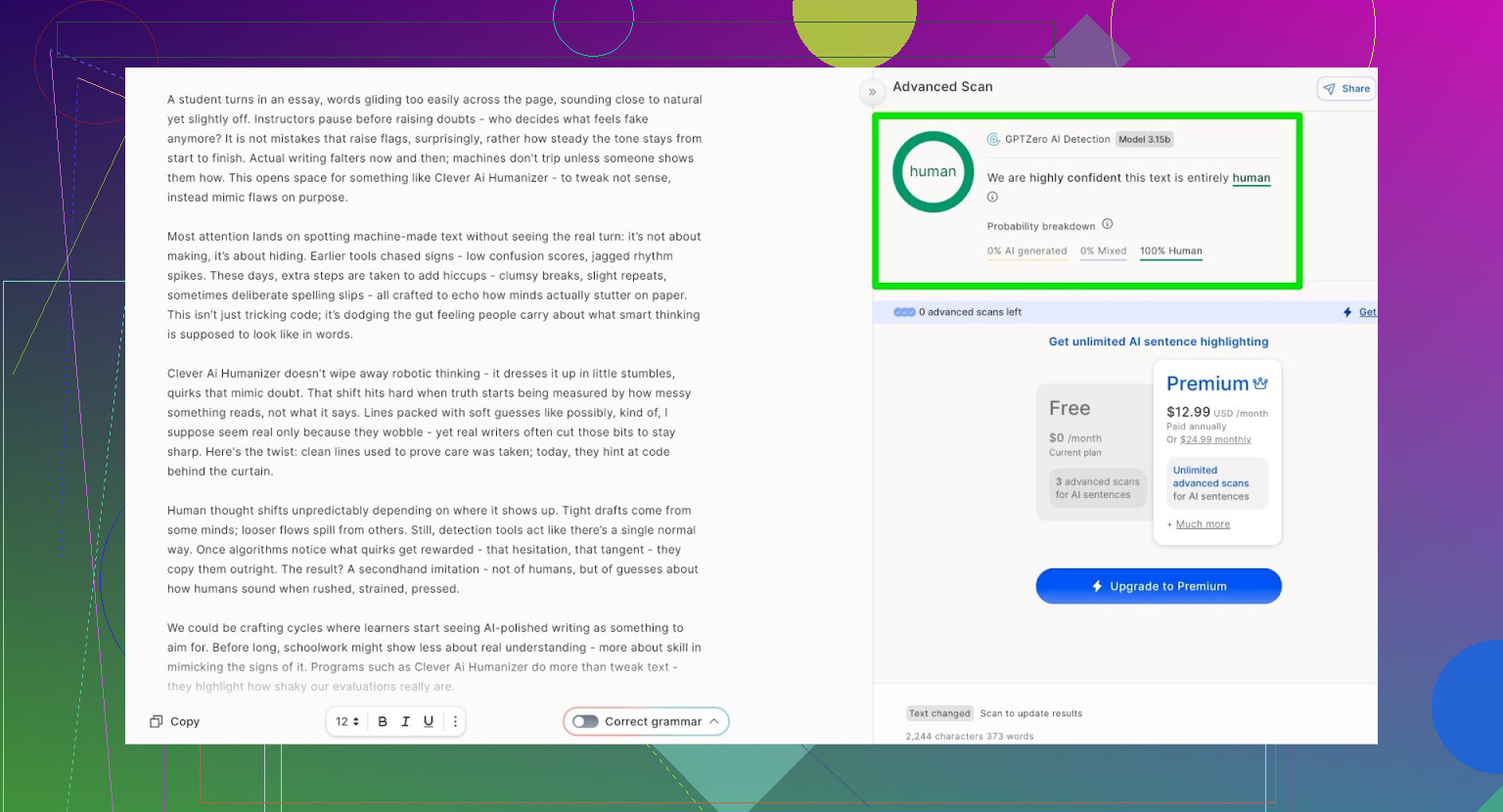

Detector check: GPTZero

Next up: GPTZero, another widely used checker.

Result:

- GPTZero: 100% human, 0% AI

So the same story: it passed as human-written.

That looks great on paper, but detectors are only half the picture.

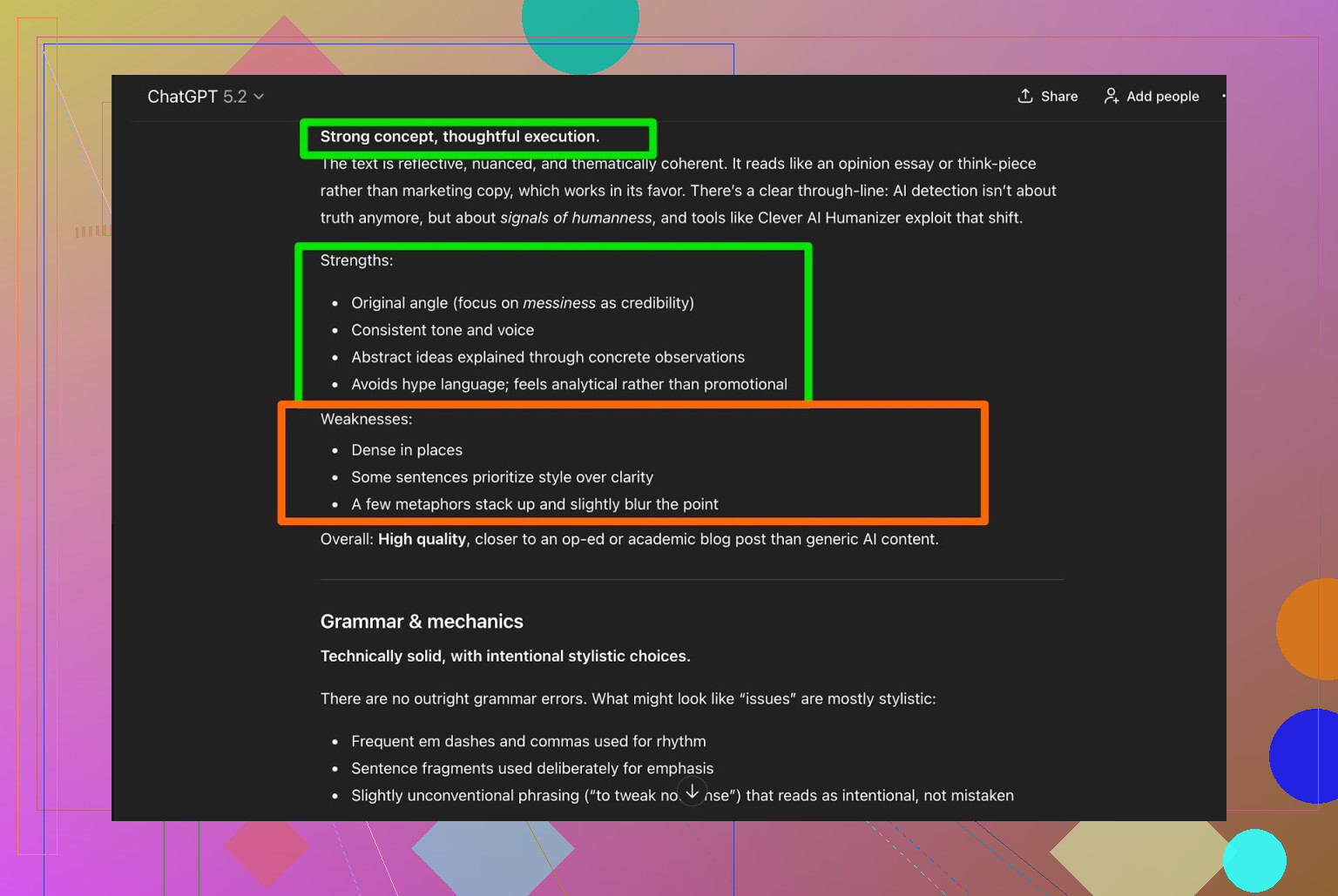

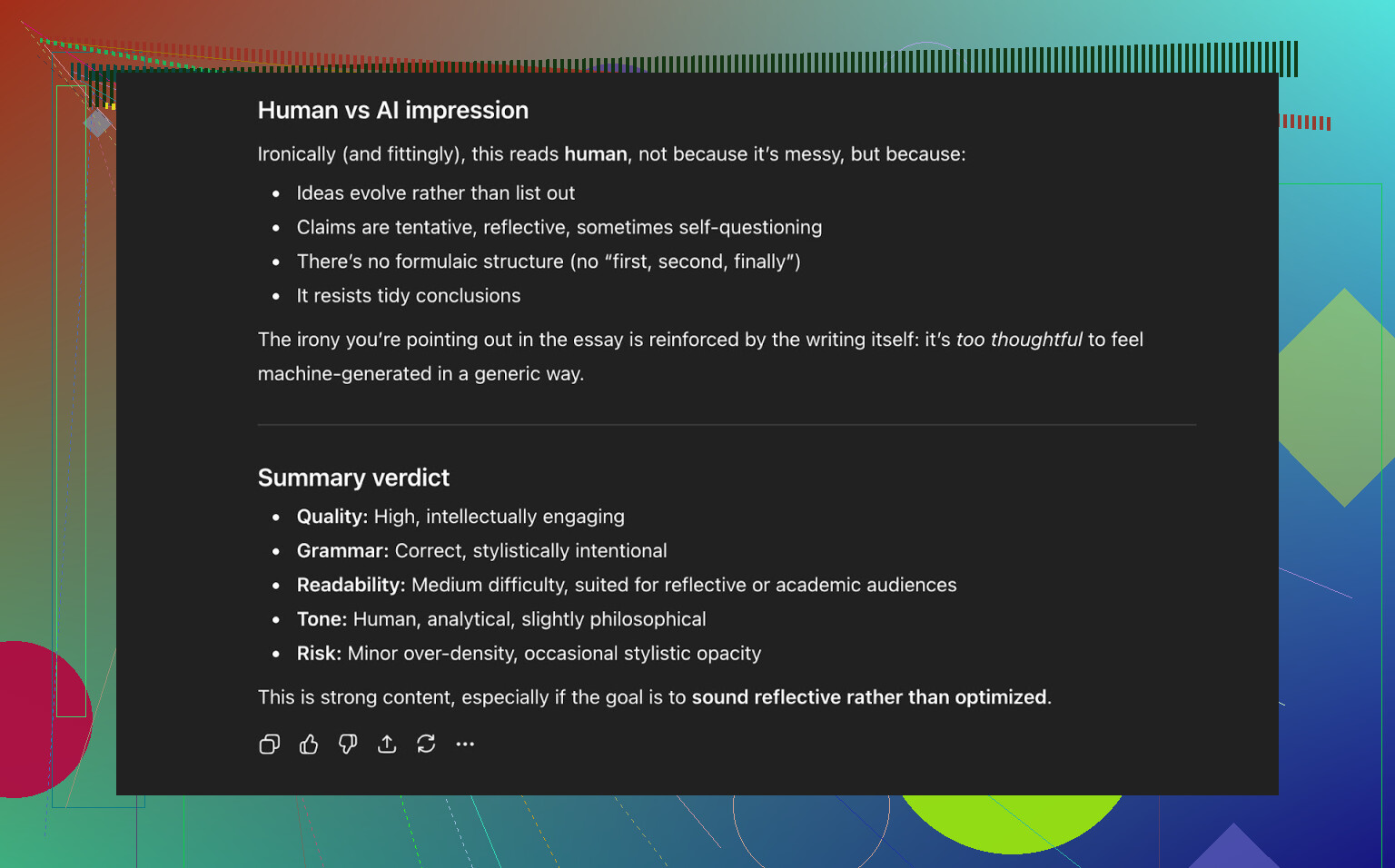

Quality check with ChatGPT 5.2

Getting past detectors is one thing. Not turning the text into garbled, weird-sounding nonsense is another.

A lot of humanizers “work” in the sense that they confuse detectors, but the end result reads like someone stitched together five different Wikipedia articles.

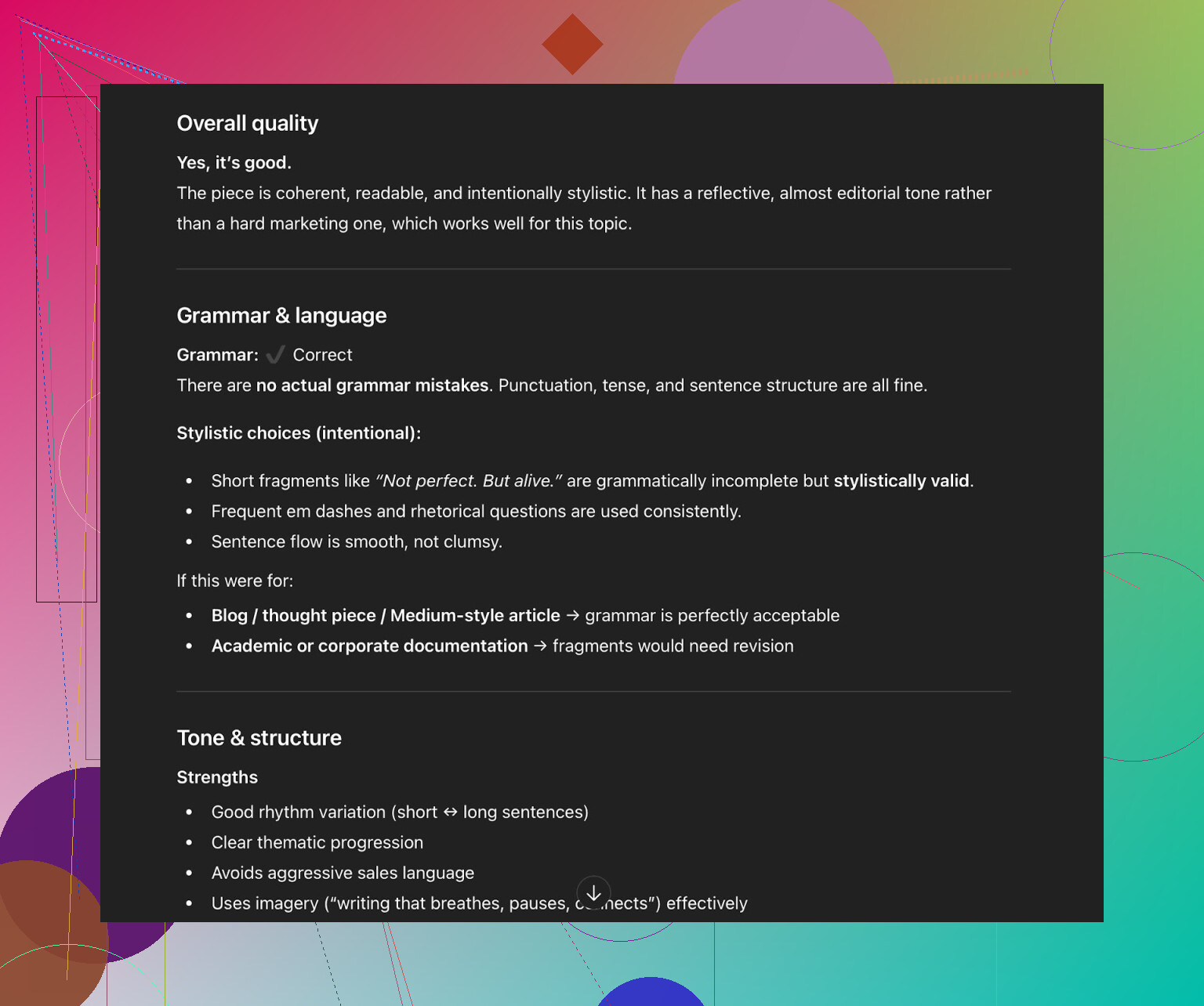

So I fed the Clever AI Humanizer output back into ChatGPT 5.2 and asked it to:

- Evaluate grammar

- Comment on flow and clarity

- Say whether it still looked AI-generated or not

What it said, summarized:

- Grammar: solid

- Style: fits “Simple Academic” decently

- Still suggested that a human should revise it

And I actually agree with that last point.

Human editing after any AI humanizer or paraphraser is basically non-negotiable if the text matters (school, clients, job applications, etc.). Tools can get you close, but they don’t replace a final pass by a real person.

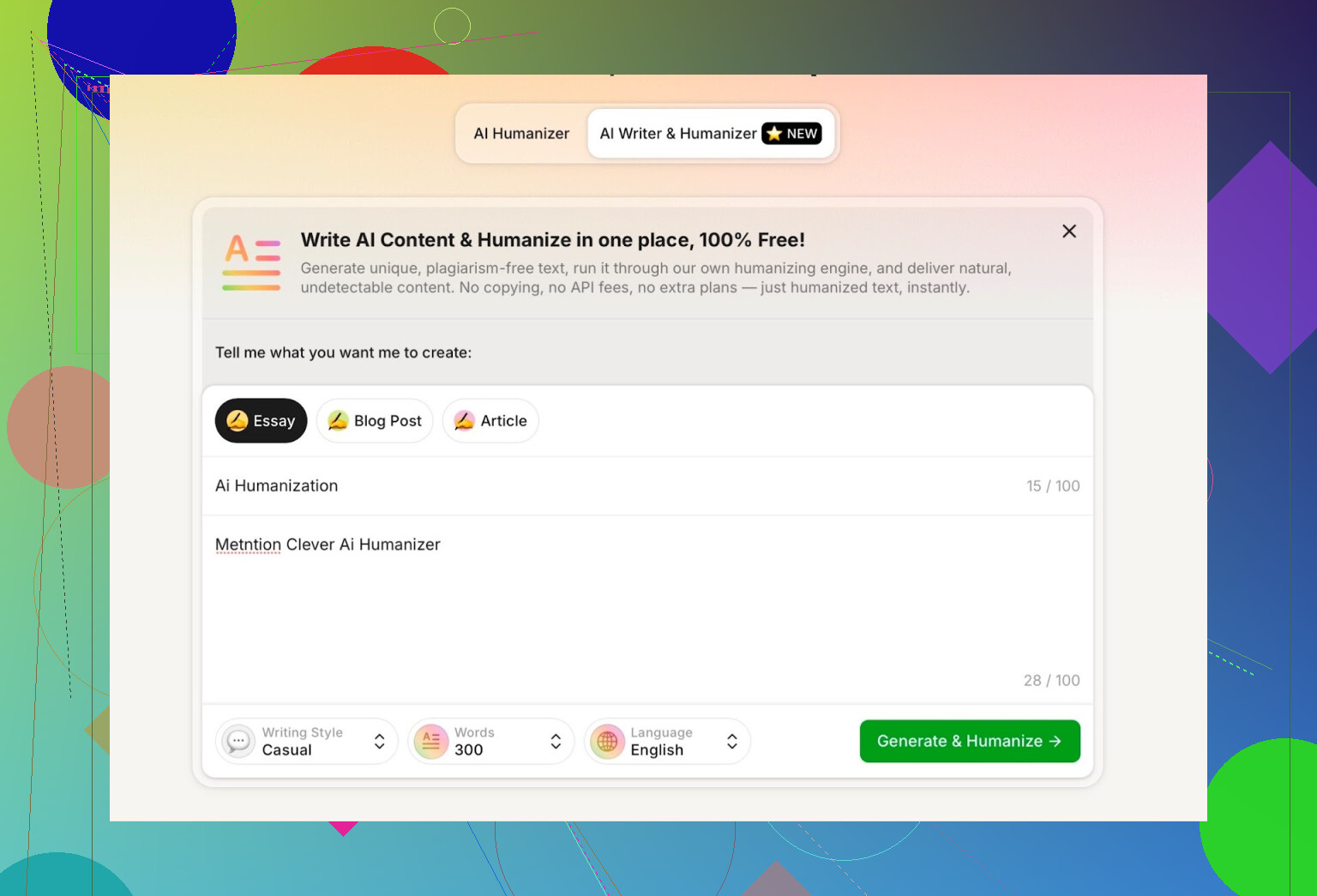

Trying their built‑in AI Writer

They’ve added a feature called AI Writer:

What it does is pretty straightforward:

- You enter a prompt, choose style and content type

- The tool writes and humanizes in one go

- No need to copy from another LLM like ChatGPT first

That alone makes it stand out, because most “humanizers” just rewrite text you paste in. They don’t generate it themselves.

The obvious advantage here is control: if the system generates the content and humanizes it at the same time, it can shape structure and phrasing so it looks less like typical LLM output, which should help with detection.

For my test, I did this:

- Style: Casual

- Topic: AI humanization, with a mention of Clever AI Humanizer

- I intentionally put a mistake in the prompt to see how it dealt with it

One thing I wasn’t thrilled about: I asked for a specific length (e.g., around 300 words), and it overshot.

If I request 300 words, I like getting something close to 300, not significantly more. Not a dealbreaker, but it’s the first real negative I ran into.
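If you want a number instead of a gut feeling for how far a draft overshoots, a word-count check is enough. This is only a rough sketch: `length_report` and the 10% tolerance are my own illustrative choices, not something the tool provides.

```python
def length_report(text: str, target: int, tolerance: float = 0.10) -> dict:
    """Compare a text's whitespace-delimited word count to a target length."""
    words = len(text.split())
    deviation = (words - target) / target  # positive means overshoot
    return {
        "words": words,
        "deviation": deviation,
        "within_tolerance": abs(deviation) <= tolerance,
    }


if __name__ == "__main__":
    draft = "word " * 360  # stand-in for a pasted draft
    print(length_report(draft, target=300))
```

Counting on whitespace is crude (hyphenated words and numbers each count as one word), but it is close enough to tell “around 300” from “well past 300.”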

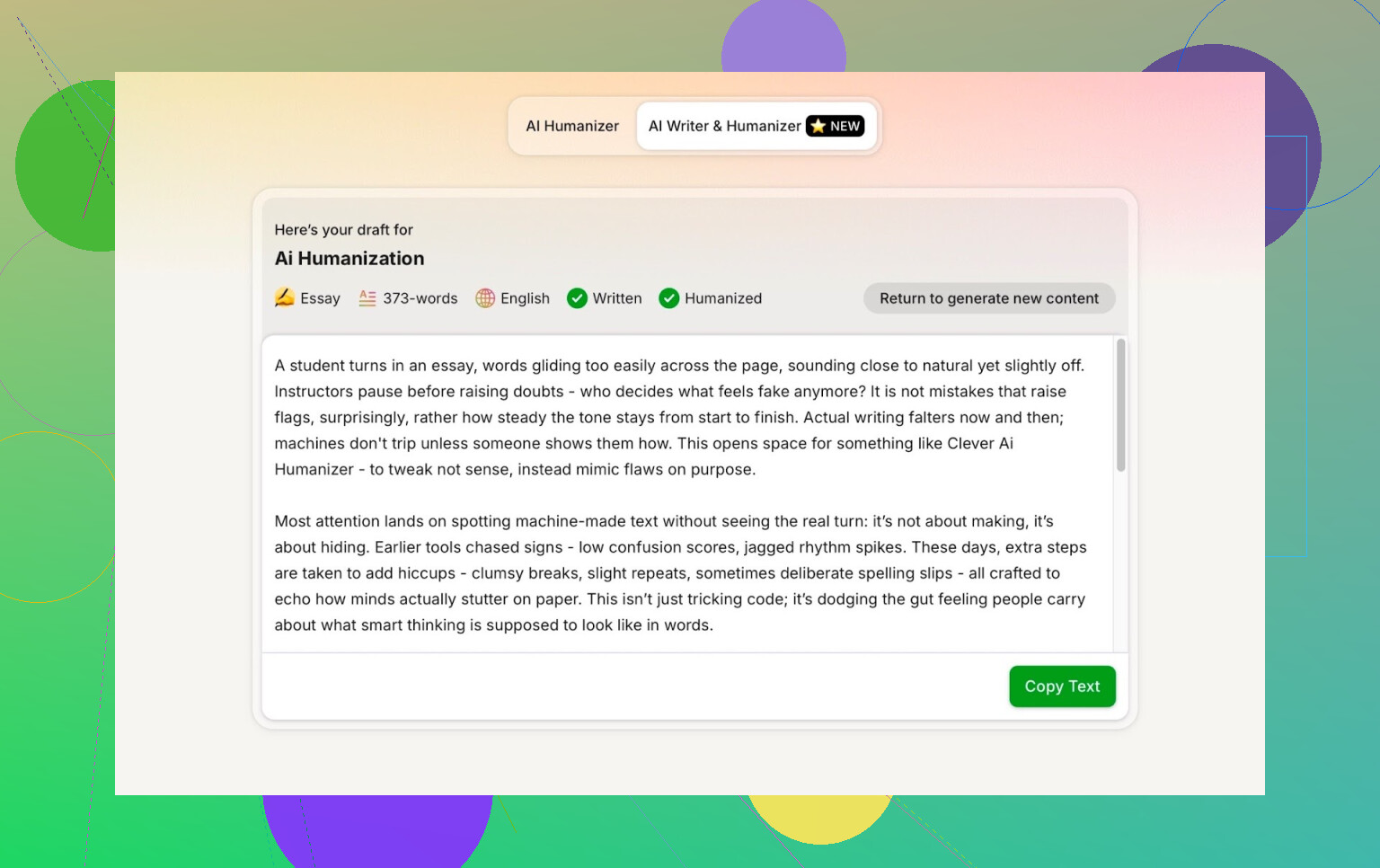

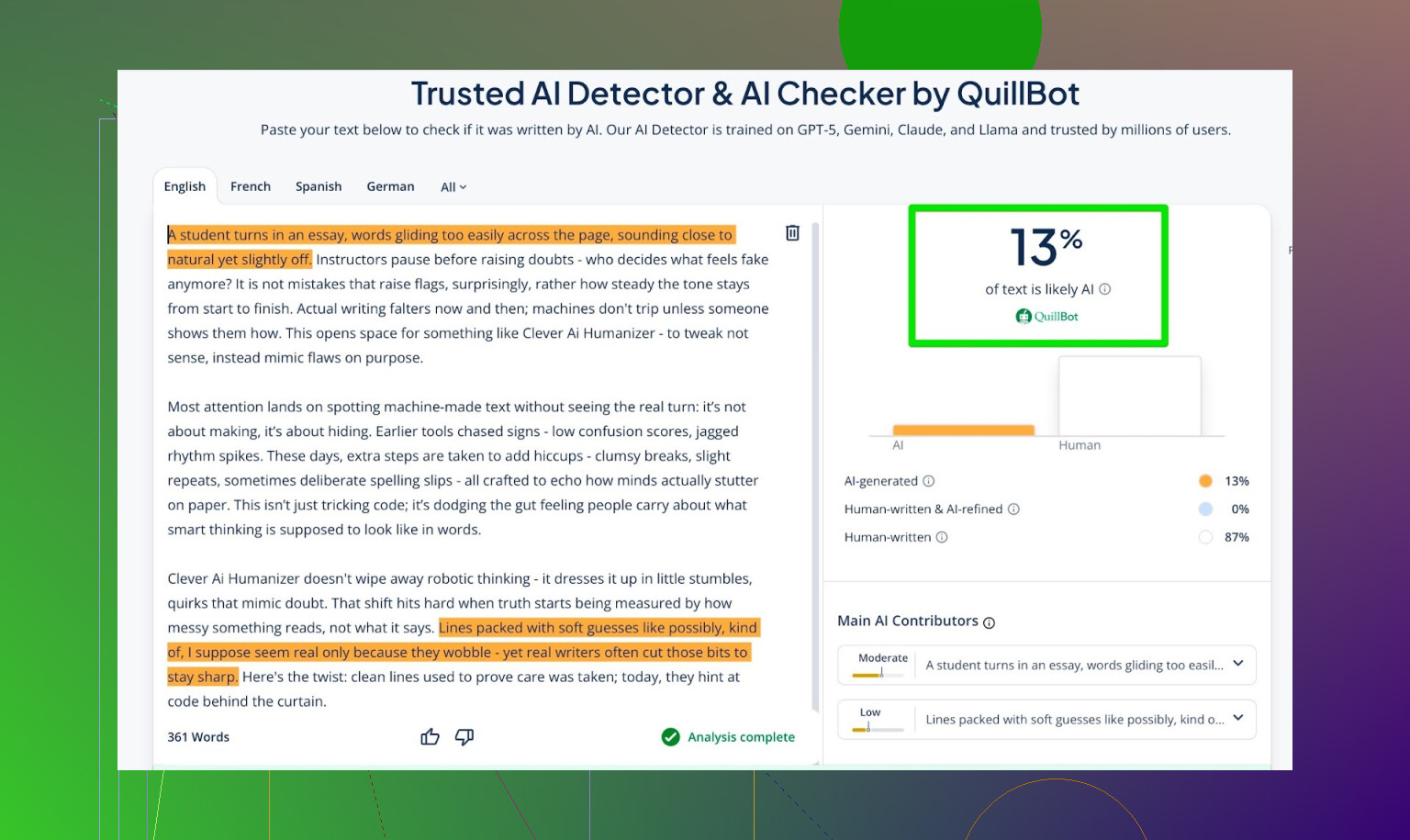

Running AI detection on the AI Writer output

Same routine: I took what the AI Writer produced and ran it through multiple detectors.

Results:

- GPTZero: 0% AI

- ZeroGPT: 0% AI, flagged as 100% human

- QuillBot detector: 13% AI

Given how aggressive some detectors are right now, those numbers are honestly pretty solid.

Quality of the AI Writer output

I pushed the AI Writer result through ChatGPT 5.2 as well to get a second opinion on:

- Coherence

- Grammar

- “Humanness”

Response boiled down to:

- Reads like a human wrote it

- Structure and flow are natural

- No obvious red flags in phrasing

So in this test round, Clever AI Humanizer:

- Passed ZeroGPT, GPTZero, and QuillBot with favorable scores

- Also convinced ChatGPT 5.2 that the text was human-written

For a free tool, that is rare.

Comparison with other humanizers

In my own tests, I put it up against several competitors, both free and paid.

Tools I tested it against included:

- Grammarly AI Humanizer

- UnAIMyText

- Ahrefs AI Humanizer

- Humanizer AI Pro

- Walter Writes AI

- StealthGPT

- Undetectable AI

- WriteHuman AI

- BypassGPT

Here’s how the tools stacked up in terms of AI detector scores in my runs:

| Tool | Free | AI detector score |
| --- | --- | --- |
| ⭐ Clever AI Humanizer | Yes | 6% |
| Grammarly AI Humanizer | Yes | 88% |
| UnAIMyText | Yes | 84% |
| Ahrefs AI Humanizer | Yes | 90% |
| Humanizer AI Pro | Limited | 79% |
| Walter Writes AI | No | 18% |
| StealthGPT | No | 14% |
| Undetectable AI | No | 11% |
| WriteHuman AI | No | 16% |
| BypassGPT | Limited | 22% |

From what I’ve seen so far, if we’re talking free tools only, Clever AI Humanizer sits at the top of the list.

Where it falls short

It’s not some flawless magic fix. Issues I noticed:

- It doesn’t always respect exact word counts.

- There are still some detectable patterns in certain outputs.

- A few LLMs can still flag parts of the text as AI, depending on prompt and length.

- It sometimes drifts from the original structure/content more than you might want.

That last point is probably part of why it scores well: the more it rewrites, the less it looks like common AI output.

As for grammar:

- I’d put it around 8–9 out of 10

- Multiple grammar tools and LLMs gave similar impressions

- It reads smoothly and doesn’t feel like broken English

One thing I appreciated: it doesn’t do the cheesy “fake human” trick where tools start adding random typos like “i had to do it” everywhere just to throw off detectors. Yes, deliberate errors can sometimes help you sneak past detection, but then your text looks sloppy.

So you’re not getting intentional misspellings as a feature, which I personally consider a plus.

The weird “AI feel” problem

Even when detectors show 0% AI across the board, there are times when the text still has that subtle “AI rhythm” to it.

Hard to describe, but if you read AI content all day, you start to notice patterns:

- Repetitive sentence structures

- Overly balanced paragraphs

- Slightly bland transitions

Clever AI Humanizer does a better job than most free tools at breaking those patterns, but it doesn’t erase them 100%. No tool does, as of now.

That’s just where this whole space is: constant back-and-forth between detection tools and humanizers, like a permanent cat-and-mouse loop.
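One rough way to put a number on that “rhythm” is to look at how much sentence lengths vary. The sketch below is an assumption on my part, not how any detector actually works: `split_sentences` is a naive splitter and `rhythm_stats` is a made-up helper name. A low standard deviation just means the sentences are all about the same length.

```python
import re
import statistics


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after ., ! or ? when followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def rhythm_stats(text: str) -> dict:
    """Summarize sentence lengths in words; low spread suggests uniform rhythm."""
    lengths = [len(s.split()) for s in split_sentences(text)]
    if not lengths:
        return {"sentences": 0, "mean": 0.0, "stdev": 0.0}
    return {
        "sentences": len(lengths),
        "mean": statistics.mean(lengths),
        "stdev": statistics.pstdev(lengths),  # population standard deviation
    }
```

Comparing the numbers for a humanized draft against something you wrote yourself is more useful than any fixed threshold.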

Bottom line

If you are looking at free AI humanizers, Clever AI Humanizer is:

- One of the strongest I’ve used for detection evasion

- Surprisingly good on readability and grammar

- Way better than most of the “slap a new logo on it and call it a humanizer” tools

Is it perfect? No.

- You still need to edit by hand.

- It can overshoot length.

- Some outputs still have that faint AI “aftertaste.”

But in terms of cost-benefit, it’s hard to complain when the tool itself doesn’t charge anything.

Use it as a starting point, not the final product.

Extra resources & Reddit threads

If you want to dive deeper into other AI humanizers (with detection screenshots and comparisons), there’s a useful Reddit post here:

There’s also a Reddit-specific discussion focused on Clever AI Humanizer itself:

Yeah, “mixed” is exactly how I’d describe Clever AI Humanizer too.

For me it’s really sensitive to input quality + style choice. When I feed it super generic AI text and pick something like “Simple Academic,” it usually comes out smoother and more natural. But when the source text already has a distinct voice (like casual, ranty, or heavily opinionated), Clever sometimes sandpapers everything into this same mid‑formal, slightly bland tone. That’s when it still feels AI-ish even if detectors like it.

Couple of specific UX / usage points that might explain what you’re seeing:

It’s great at structure, weaker at voice

- It’s pretty good at fixing stiff phrasing and making paragraphs flow.

- It’s not great at preserving a strong, unique style. If you start with spicy, you often end up with mildly seasoned oatmeal.

- That’s probably why some outputs feel “off” even when technically improved.

Detectors vs vibes

- Just because it passes GPTZero or ZeroGPT doesn’t mean a human won’t clock it as AI-ish.

- I’ve had texts that scored “0% AI” but still had that repetitive sentence rhythm and very “balanced” paragraph structure.

- So if you’re writing for a real human audience, detector scores alone are a bad compass.

You kinda have to “prompt engineer” your edits

- Short, generic inputs → more chance it rewrites too aggressively and drifts in meaning.

- Longer, more detailed inputs with clear structure → it behaves better and keeps the logic intact.

- I’ve started using it mostly on sections (2–4 paragraphs) instead of full articles. That gives me more control over tone.
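Working in sections is easy to script if your draft uses blank lines between paragraphs. A minimal sketch, assuming that layout; `chunk_paragraphs` is a hypothetical helper and the default of 3 is just the midpoint of the 2–4 range:

```python
def chunk_paragraphs(text: str, size: int = 3) -> list[str]:
    """Split text on blank lines and regroup it into chunks of `size` paragraphs."""
    if size < 1:
        raise ValueError("size must be at least 1")
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    return [
        "\n\n".join(paragraphs[i : i + size])
        for i in range(0, len(paragraphs), size)
    ]
```

The last chunk can come out shorter than the rest, so if it is a single paragraph, merge it into the previous chunk by hand.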

Post‑edit is not optional

- I never use Clever AI Humanizer output as‑is for anything serious.

- My workflow now:

  - Generate with preferred LLM

  - Run through Clever AI Humanizer in a chosen style

  - Do a fast but intentional human pass:

    - Swap a few transitions

    - Add a couple of personal asides or specific details

    - Remove obvious “AI sentences” like “In conclusion,” or “Overall, it is important to note…”

- That last 5–10 minutes of manual editing is where it finally stops sounding “off.”
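The “remove obvious AI sentences” step can be partly automated by flagging stock phrases for review. This is a sketch under my own assumptions: the phrase list only contains the two examples mentioned in the workflow, and `flag_stock_phrases` is an illustrative name. It flags lines for you to look at; it does not rewrite anything.

```python
import re

# Illustrative list only; extend it with phrases you keep running into.
STOCK_PHRASES = (
    "in conclusion",
    "overall, it is important to note",
)


def flag_stock_phrases(
    text: str, phrases: tuple[str, ...] = STOCK_PHRASES
) -> list[tuple[int, str]]:
    """Return (line_number, phrase) pairs for each case-insensitive match."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for phrase in phrases:
            if re.search(re.escape(phrase), line, flags=re.IGNORECASE):
                hits.append((lineno, phrase))
    return hits
```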

Where I disagree slightly with @mikeappsreviewer

- They’re right that it’s strong for a free tool and detector-wise it’s solid.

- But I don’t think it’s as consistently “natural” as their screenshots suggest. On topics with any nuance or strong opinion, I find Clever often over-flattens the voice.

- Also, in my runs it sometimes keeps subtle AI patterns like “However, …” every third sentence. Detectors may not care, but humans who read a lot of AI text do.
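If you want to check the “However, …” habit in your own outputs, counting sentence openers is a quick test. A rough sketch, with a hypothetical watch list (`OPENERS`) and a naive sentence splitter, so treat the counts as approximate:

```python
import re
from collections import Counter

# Hypothetical starter list of sentence openers to watch for.
OPENERS = ("however", "moreover", "additionally", "furthermore", "overall")


def opener_counts(text: str, openers: tuple[str, ...] = OPENERS) -> Counter:
    """Count sentences whose first word is one of the watched openers."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    counts = Counter()
    for sentence in sentences:
        first = re.match(r"[A-Za-z]+", sentence)
        if first and first.group(0).lower() in openers:
            counts[first.group(0).lower()] += 1
    return counts
```

Dividing a count by the total number of sentences gives a rate you can compare between the raw and humanized versions.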

Where it actually shines

- Cleaning up bland, obviously AI-written informational content (how-tos, explainers).

- First-pass “humanization” for stuff you plan to touch up anyway.

- Avoiding the silly trick some tools use of injecting typos to fool detectors. At least here the grammar stays mostly clean.

Where it struggles

- Strong personality pieces (rants, humor, storytelling).

- Very strict requirements like “exactly 300 words” or very rigid structure.

- Matching a specific human’s previously established style.

If your goal is: “look less like raw AI and get past basic detectors,” Clever AI Humanizer is honestly one of the better free options and worth keeping in the toolkit.

If your goal is: “sound like a specific human with a recognizably unique voice,” no humanizer is there yet. Clever helps you get to a decent draft, but you still need to go in and mess it up in a human way: inject small tangents, slightly uneven sentence lengths, concrete personal details, and even a couple of minor stylistic quirks or tyops like this.

So yeah, your mixed results track with mine. I’d keep using Clever AI Humanizer, just mentally reframe it as a strong pre-edit tool, not a one-click “this is indistinguishable from a human” button.

Yeah, your “mixed” experience lines up with how these tools actually behave, and not just Clever AI Humanizer specifically.

Where I slightly disagree with both @mikeappsreviewer and @sonhadordobosque is this: I don’t think the main problem is just “input quality” or “use it only as a pre‑edit step.” The bigger issue is the mismatch between what people expect and what these tools are actually designed to do.

Clever AI Humanizer is basically optimized for three things:

- Break common LLM patterns

- Smooth grammar and flow

- Confuse basic AI detectors enough to not scream “100% AI”

It is not optimized for:

- Preserving your exact voice

- Being logically tight on complex arguments

- Matching a very specific tone every single time

So when you say “sometimes it improves flow and tone, other times it feels off,” that’s actually the tradeoff in action. The more aggressively it disrupts AI patterns, the more it risks:

- Flattening personality

- Slightly warping emphasis or nuance

- Making everything sound like the same “generic smart person on the internet”

Instead of changing tools, I’d change how you use Clever AI Humanizer:

Use it only for certain parts

Let it rewrite:

- Factual explanations

- Background sections

- Transitional paragraphs

Keep:

- Hooks, intros, personal bits

- Strong opinions, stories, jokes

That alone reduces the “off” feeling a lot.

Pick styles based on destination, not vibe

If it’s going to a teacher, client, or corporate setting, “Simple Academic” or similar is fine.

For blogs or casual pieces, those modes can make it sound too stiff and “AI but in a suit.” Try its more relaxed styles and then manually punch up 2–3 sentences per paragraph.

Expect to re-add “imperfections” yourself

Clever AI Humanizer is pretty clean. Maybe too clean. If you want it to feel human, you actually have to:

- Insert oddly specific details

- Break a pattern with a short line here and there

- Let one or two sentences run a bit long or tangled

That human messiness is something no humanizer is brave enough to add for you.

Don’t chase 0% on every detector

If the piece reads fine and a detector still shows some AI probability, that might be good. Perfect 0% across every tool often correlates with text that has been over-massaged and feels weird to actual humans. I’d accept “low to moderate AI score + very human vibe” over “0% AI + uncanny smoothness” every time.

Compared with what @mikeappsreviewer and @sonhadordobosque described, I’d say: yes, Clever AI Humanizer is one of the better free options, and yes, it’s solid for cleaning up obvious AI artifacts. But if you try to use it as a one-click “make this indistinguishable from human” button, it will keep disappointing you.

Used as:

- a structure & detection helper,

- followed by a short, deliberate human pass,

Clever AI Humanizer is absolutely worth keeping in your stack. Just don’t hand it your entire voice and expect it to give it back untouched.

You’re not imagining the inconsistency. Tools like Clever AI Humanizer sit in a weird middle ground: good enough to help, not good enough to trust blindly.

Quick breakdown from a UX / outcomes angle rather than more tests:

Where Clever AI Humanizer shines

Pros

- Very low friction: paste → pick style → done. No paywall, no account, which already beats half the field.

- Output is usually cleaner and less “LLM template-y” than what you’d get straight from a normal model, especially in Simple Academic or Casual.

- Plays relatively nicely with mainstream detectors, which is why people like @mikeappsreviewer rate it highly in comparisons.

- It does not trash the grammar just to look “human,” unlike some tools that introduce fake mistakes.

Where it bites you

Cons

- Tone drift is real. You feel it as “off” because it tends to normalize everything into one default narrative voice. Personal quirks vanish.

- It sometimes rearranges logic or emphasis. For more nuanced content, this is worse than getting caught by a detector.

- Style controls are shallow. @sonhadordobosque and @shizuka hinted at using it as a preprocessing step, but the deeper issue is: the style labels don’t reliably match what you have in your head.

- No meaningful control over how much it rewrites. Sometimes you just want 30% “de‑AI‑ification,” not a near-total refactor.

Instead of repeating the “use it, then manually edit” advice, here’s a different angle that might stabilize your experience:

Lock your voice first, then humanize only the “boring” regions

Write or generate your piece normally. Mark paragraphs that are:

- Pure explanation

- Definitions

- Background / context

Run only those through Clever AI Humanizer. Leave your intro, conclusions, anecdotes and jokes untouched. This limits the tonal whiplash.
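In script form, the idea is just “apply the rewrite to the marked paragraphs and pass everything else through.” The sketch below assumes nothing about Clever AI Humanizer having an API: `humanize` is a placeholder for whatever callable you supply (even a function that prompts you to paste the tool’s output back in), and `humanize_selected` is a made-up name.

```python
from typing import Callable, Iterable


def humanize_selected(
    paragraphs: list[str],
    selected: Iterable[int],
    humanize: Callable[[str], str],
) -> list[str]:
    """Apply `humanize` only to paragraphs whose index is in `selected`."""
    chosen = set(selected)
    return [
        humanize(p) if i in chosen else p  # untouched paragraphs keep your voice
        for i, p in enumerate(paragraphs)
    ]


if __name__ == "__main__":
    draft = ["my intro", "a dry definition", "my closing joke"]
    # str.upper stands in for the real rewrite step.
    print(humanize_selected(draft, selected=[1], humanize=str.upper))
```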

Use it for shape, not polish

Slight disagreement with @mikeappsreviewer here: I would not rely on it as your main flow improver. Let your main model (or yourself) do the stylistic pass, then use Clever AI Humanizer primarily to break obvious AI traces in sentence structure. After that, re‑inject 2 or 3 of your original sentences per section to restore voice.

Create a “house style” template outside the tool

Since its style system is blunt, keep a short checklist next to you:

- Average sentence length you want

- How often to use first person

- Whether you allow rhetorical questions, slang, etc.

After humanizing, do a fast scan against that checklist. This makes the UX feel more predictable because you are the consistent style layer, not the tool.
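That checklist can be turned into a quick automated scan. Everything here is an assumption for illustration: the `HouseStyle` fields, the default thresholds, and the short first-person word list are placeholders you would tune to your own writing.

```python
import re
from dataclasses import dataclass

# Small illustrative set; it will miss some first-person forms.
FIRST_PERSON = {"i", "i'm", "i've", "i'd", "i'll", "me", "my", "mine"}


@dataclass
class HouseStyle:
    # All thresholds are hypothetical defaults; set them to your own taste.
    max_avg_sentence_words: float = 20.0
    min_first_person_ratio: float = 0.0
    allow_rhetorical_questions: bool = True


def check_style(text: str, style: HouseStyle) -> list[str]:
    """Return a list of checklist violations (empty if the text passes)."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    words = re.findall(r"[A-Za-z']+", text.lower())
    problems = []
    if sentences:
        avg = sum(len(s.split()) for s in sentences) / len(sentences)
        if avg > style.max_avg_sentence_words:
            problems.append(f"average sentence length {avg:.1f} exceeds limit")
    if words:
        ratio = sum(w in FIRST_PERSON for w in words) / len(words)
        if ratio < style.min_first_person_ratio:
            problems.append("first person used less often than the house style wants")
    if not style.allow_rhetorical_questions and any(s.endswith("?") for s in sentences):
        problems.append("contains a question, which the house style disallows")
    return problems
```

It only catches what can be counted, so it backs up the manual scan and does not replace it.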

Pair it with contrast editing, not just proofreading

Instead of only fixing typos afterward, deliberately:

- Shorten one long sentence per paragraph

- Add one very concrete detail (time, place, number, personal cue) per section

- Remove one generic phrase like “in today’s digital landscape” each time you see it

That tiny contrast is what breaks the remaining AI “rhythm” people talk about.

Match tool to risk level

- Low‑risk stuff (blog drafts, internal docs): Clever AI Humanizer is fine as a quick pass and its detector performance is a bonus.

- High‑risk stuff (academic work, legal, sensitive client material): use it only as a suggestion engine. You can copy its better phrasings, but do not hand over structural control.

On the competitor side:

- Approaches like the one @sonhadordobosque described lean more on manual re‑authoring, which is safer but slower.

- @shizuka’s angle of treating humanizers as “pre‑edit filters” is solid, though I’d argue Clever AI Humanizer is more useful as a pattern breaker than a typical paraphraser.

- The kind of systematic testing @mikeappsreviewer did is valuable, but in day‑to‑day use, your own “does this still sound like me?” check is more important than a 0% reading anywhere.

If you keep Clever AI Humanizer in your stack as a targeted helper instead of a universal fixer, the UX stops feeling random and starts feeling like a specialized wrench: great for some bolts, terrible for others.