I’m having trouble sending large files through FTP because the connection keeps dropping or timing out before the transfer completes. Does anyone know the best way to reliably upload or download large files with FTP? Any tips on setting or troubleshooting FTP clients for big transfers would help.

Figuring Out How to Move Big Files: FTP or Something Else?

Okay, throwing my two cents in here, ’cause I’ve wrestled with the same headache: What’s the least painful way to move those gigantic files around, especially if you’re bouncing between office machines, home setups, or sharing them across your team? Here’s what I’ve poked at so far.

When You Want That Classic, No-Nonsense FTP Experience

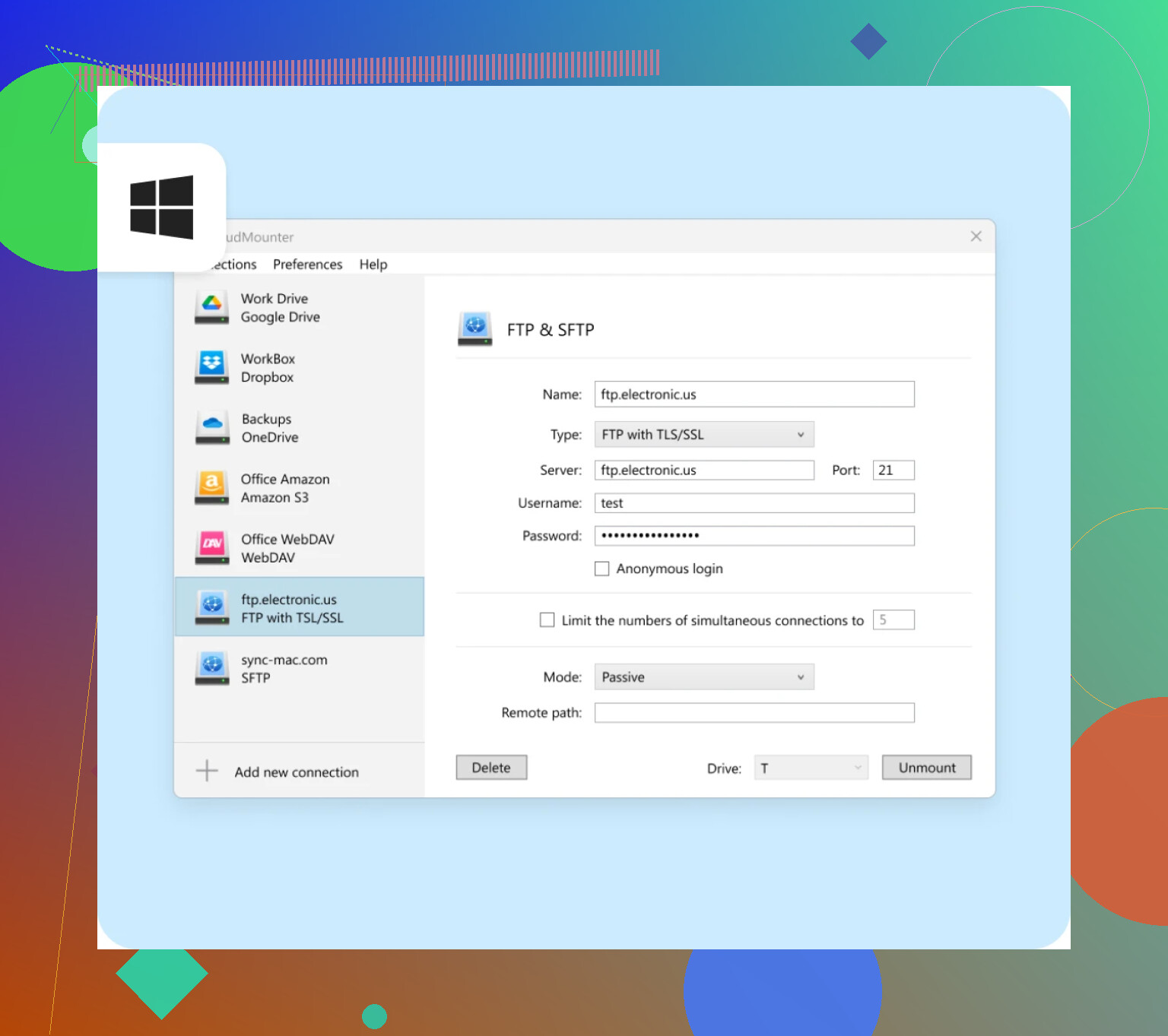

Honestly, dealing with old-school FTP clients sometimes feels like dialing a rotary phone in the age of TikTok. If you’re tired of the “open another app, chase down server details, pray for connection” dance, check this out: CloudMounter.

Picture this: Instead of firing up some retro utility that looks straight out of Windows 98, you mount your FTP (or SFTP) space as if it’s just another drive. No context switching, no side-eyeing weird, clunky interfaces. On Mac, you get Finder; on Windows, it’s plain old File Explorer. Drop your files like you’re moving them into a folder—except it all goes to your online storage.

Cool bonus? CloudMounter doesn’t just do FTP. It can pull in your cloud junk from Google Drive, Dropbox, and OneDrive, so you’re not managing three different apps for three different tasks. You dunk your files wherever they need to go and get on with your life.

Prefer Something Familiar? Just Use Google Drive Like a Big, Dumb Hard Drive

Let’s be real: most people just want to dump giant files somewhere and share a link, not mess with protocols nobody remembers how to configure. Enter Google Drive for desktop.

Here’s the deal: after you install it, your Drive shows up as a “real” drive on your computer. That’s right, a big ol’ G: drive (while Mac users get their own version). You copy-paste your monster-sized files straight into it, and the upload happens in the background. No FTP jargon, no weird firewall stuff, and zero chance your recipient has to think about setting up an FTP client—just click a link, download, done.

It doesn’t “feel” like FTP, but honestly? In 2024, who cares as long as the files get where they’re going.

So…Which Lane Should You Pick?

- If you need to connect to actual FTP servers (think: your company website, a client’s file drop, whatever), CloudMounter is about as straightforward as it gets.

- If your only goal is shoveling huge files from point A to B, and nobody needs to connect to an FTP server, Google Drive is so much less hassle. You get a local feel, cloud flexibility, and link-sharing built in—what’s not to like?

Just figure out what your real need is, then pick whichever solution gets you out of “file transfer hell” fastest. There’s enough junk to deal with out there—moving files shouldn’t be another source of pain.

Let’s just cut through the noise: FTP isn’t really designed for “I gotta move my entire life in one go” file transfers. It’s old, cranky, and sometimes just breaks when you pour a 20GB file through it. Seen it. Lived it. Yelled at my screen more than once. Mike’s CloudMounter sounds nice and all for mounting stuff, but I’m not totally on board with doing everything through virtual drives—it can turn your laptop into a space heater, and the Finder/File Explorer sometimes lags like it’s 1995 on a dial-up.

Here’s how I survive this FTP mess:

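- Never use plain old FTP; use SFTP at least. It runs everything over a single SSH connection, so there’s no separate data channel for your router or firewall to choke on, plus it’s encrypted so nobody’s snooping your files.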

- Pick a client that supports resume! Seriously, classic FileZilla (free; ugly as sin) and WinSCP (also free, still ugly) will pick up where you left off when the connection dies. Moving big files? You need resume support.

- If possible, split your massive file into chunks (7-Zip and Keka are magic for this—just right-click, set to split into 500MB parts or whatever). Upload parts—if one chokes, you just start again from that part. Merge on the other end.

- On super-janky connections, throttle your upload speed to like 80% of your max bandwidth. A saturated uplink can starve the FTP control connection, and then one ISP hiccup is enough to time out the whole transfer.

- For the nuclear option: if you’re transferring files between places you control, just spin up an SSH server on one box and run rsync over it (yes, it works on Windows, via WSL or Cygwin). Command-line isn’t pretty, but with --partial and a retry loop it picks up where it left off after every drop instead of starting over.
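
If you’d rather script the splitting than right-click through 7-Zip, here’s a minimal Python sketch of the split-and-merge idea. The function names (`split_file`, `merge_parts`, `sha256_of`) and the `.partNNNN` naming are my own inventions, not anything 7-Zip or Keka produce, and the parts are raw slices rather than archives. The checksum is there so you can confirm the merged file matches the original.

```python
import hashlib
from pathlib import Path

CHUNK_SIZE = 500 * 1024 * 1024  # 500 MB parts, matching the suggestion above


def sha256_of(path: Path, bufsize: int = 1024 * 1024) -> str:
    """Hash a file in small reads so a huge file never sits in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        while block := fh.read(bufsize):
            digest.update(block)
    return digest.hexdigest()


def split_file(src: Path, out_dir: Path, chunk_size: int = CHUNK_SIZE,
               bufsize: int = 1024 * 1024) -> list[Path]:
    """Write src as numbered raw parts (name.part0000, ...) and return them."""
    out_dir.mkdir(parents=True, exist_ok=True)
    parts: list[Path] = []
    with src.open("rb") as fh:
        index = 0
        while True:
            part = out_dir / f"{src.name}.part{index:04d}"
            written = 0
            with part.open("wb") as out:
                while written < chunk_size:
                    block = fh.read(min(bufsize, chunk_size - written))
                    if not block:
                        break
                    out.write(block)
                    written += len(block)
            if written == 0:
                part.unlink()  # source was already exhausted; drop the empty part
                break
            parts.append(part)
            index += 1
            if written < chunk_size:
                break  # short part means we hit end of file
    return parts


def merge_parts(parts: list[Path], dest: Path, bufsize: int = 1024 * 1024) -> None:
    """Concatenate parts in name order back into one file."""
    with dest.open("wb") as out:
        for part in sorted(parts):
            with part.open("rb") as fh:
                while block := fh.read(bufsize):
                    out.write(block)
```

Run `sha256_of` on the original before you upload and on the merged file at the other end. If the two hashes differ, one of the parts got mangled and only that part needs re-sending.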

Honestly tho, why are we still using FTP in 2024 unless it’s the only way your boss/client/legacy system works? If so, do yourself a favor: automate it with scripts so you hit upload and walk away, or just push those stubborn folks to use cloud storage links. CloudMounter is great for mounting those protocols, but if your connection sucks, nothing will fix flaky FTP except for stability/resume features or chunking.
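
For the “resume” part, this is roughly what a resume-capable client does under the hood, sketched with Python’s standard `ftplib`. Treat it as an illustration under assumptions: it only works if the server supports `SIZE` and `REST` for uploads (not all do), the helper names are mine, and it trusts that the bytes already on the server are a correct prefix of your local file.

```python
import ftplib
from pathlib import Path


def remote_size(ftp: ftplib.FTP, remote_name: str) -> int:
    """Return how many bytes the server already has, or 0 if the file is missing."""
    try:
        ftp.voidcmd("TYPE I")  # many servers only answer SIZE in binary mode
        return ftp.size(remote_name) or 0
    except ftplib.error_perm:
        return 0  # typically a 550 "no such file"


def resume_upload(ftp: ftplib.FTP, local_path: Path, remote_name: str,
                  blocksize: int = 64 * 1024) -> int:
    """Upload local_path, continuing from the offset the server already holds.

    Returns the byte offset the transfer started from.
    """
    offset = remote_size(ftp, remote_name)
    if offset >= local_path.stat().st_size:
        return offset  # nothing left to send
    with local_path.open("rb") as fh:
        fh.seek(offset)  # skip the bytes that already made it across
        # rest=<offset> makes ftplib issue "REST <offset>" before STOR
        ftp.storbinary(f"STOR {remote_name}", fh,
                       blocksize=blocksize, rest=offset or None)
    return offset
```

You’d call it with a logged-in connection, e.g. `resume_upload(ftplib.FTP_TLS("ftp.example.com"), Path("backup.tar"), "backup.tar")` after `login()` (the host and filename are placeholders). If the connection dies, reconnect and call it again; it starts from wherever the server got to.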

TL;DR: Use resume-capable clients, split files, consider SFTP, and push for cloud wherever possible. FTP is not your friend for big transfers—it’s the grumpy neighbor who keeps your ball when it lands in their yard.

Look, as much as @mikeappsreviewer went all in on chunking and resuming (which—truth—they do work), there are other angles to try, especially if you’re getting truly desperate with massive file transfers over FTP. First off, about using CloudMounter: actually mounting FTP/SFTP like a drive can save your sanity if you just need drag-and-drop in Finder or Explorer, but don’t expect miracles—if your line drops, it’s still up to the protocol under the hood.

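But let’s throw a curveball: has anyone tried tweaking TCP buffer/window settings or switching between active and passive mode? In passive mode the client opens both the control and the data connection, which is usually what survives NAT; in active mode the server has to connect back to you, and routers love to block that. Depending on your network config, flipping modes can dodge the NAT/router weirdness that kills long transfers.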
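
If you’re scripting this, here’s a small sketch of both knobs using Python’s standard `ftplib` and `socket` modules. The function names and the 1 MiB buffer are my own picks, not recommended values, and the OS is free to clamp or round whatever buffer size you ask for. Keepalive on the control connection is aimed at the classic failure where a router forgets an idle control channel while the data channel is busy.

```python
import ftplib
import socket


def tune_socket(sock: socket.socket, send_buffer: int = 1 << 20) -> None:
    """Enable TCP keepalive and request a larger send buffer (a hint, not a promise)."""
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_KEEPALIVE, 1)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF, send_buffer)


def connect(host: str, user: str, password: str,
            passive: bool = True, timeout: float = 60.0) -> ftplib.FTP:
    """Log in, pick active or passive mode, and tune the control connection."""
    ftp = ftplib.FTP(host, timeout=timeout)
    ftp.login(user, password)
    ftp.set_pasv(passive)   # False switches to active mode
    tune_socket(ftp.sock)   # ftp.sock is the control connection
    return ftp
```

Note that `ftplib` opens a fresh data socket for every transfer, so the send buffer setting only matters there if you apply `tune_socket` to the socket returned by `ftp.transfercmd(...)` yourself. To compare modes, run the same upload once with `passive=True` and once with `passive=False` and see which one survives.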

Also, try NOT to use wifi for horrendous uploads. Ethernet is boring, but it’s your only friend for heavy lifting. WiFi timeouts love to kill 99% completed transfers—ask me how I know.

Here’s a weird trick that once worked for me: use a lightweight VPS as a go-between—upload to the VPS (ideally geographically closer to you), then SFTP/FTP from there to the destination. Half the failed transfers, less headbanging.

And, not to start a Cloud vs Local war, but if you’ve got access to a decent cloud drive and CloudMounter, sometimes it’s less stress to upload to, say, OneDrive or Google Drive with a reliable desktop sync client, then just share from there, especially if the end party doesn’t care how the file arrives. Mike’s right, legacy FTP-only workflows should probably be banished, but if you’re stuck…

Splitting files is solid, but I’ve had cases where even merging multi-gig parts on a busted remote machine caused drama. Sometimes one huge push over SSH with resume (rsync --partial, or sftp’s reput; plain scp can’t resume) is less headache than 40 zip chunks.

Anyway—if FTP is mandatory: try a different client, try active/passive, tether your laptop, use cable not wifi, and if the upload fails at 99% one more time, just convince the other side to use CloudMounter or cloud storage. At some point, fighting FTP becomes a form of performance art.

The FTP struggle is real, but everyone here is focusing on chunking/zipping, resume-capable clients, and SFTP alternatives. All sensible—except, honestly, chunking gets old fast on unreliable endpoints, and sometimes even FileZilla can’t save you if your upload gets nuked by a flaky router right at 98%. So let’s throw some curveballs.

Have you thought about parallel transfers? Some FTP clients (think beyond FileZilla, like CuteFTP Pro or even lftp for CLI freaks) allow segmented transfers or simultaneous uploads of multi-part files. This sometimes gets around the notorious single-thread timeout issue because a failed thread only sets you back a chunk, not the whole night’s work.
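
To make the “a failed thread only sets you back a chunk” idea concrete, here’s a rough Python sketch that pushes already-split parts in parallel and reports which ones failed. It is not how CuteFTP or lftp do it internally; `upload_parts` and the `upload_one` callback are hypothetical names, and you supply `upload_one` yourself. One assumption worth stating: each worker should open its own FTP/SFTP connection inside `upload_one`, since a single `ftplib` connection can’t be shared across threads.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Iterable


def upload_parts(parts: Iterable[str],
                 upload_one: Callable[[str], None],
                 workers: int = 4) -> list[str]:
    """Upload parts concurrently and return the names of the ones that failed."""
    failed: list[str] = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Map each future back to its part so failures can be named.
        futures = {pool.submit(upload_one, part): part for part in parts}
        for future in as_completed(futures):
            if future.exception() is not None:
                failed.append(futures[future])
    return sorted(failed)
```

Feed the returned list straight back into `upload_parts` to retry only the casualties. Keep `workers` low on a shaky line; four parallel streams on a connection that can barely hold one just gives you four timeouts.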

Let’s also talk automation: scripting the process (PowerShell for Windows, bash+cron for *nix) means you can schedule your uploads and even re-attempt failed ones—way more reliable for multi-night monster dumps than standing watch for the “connection lost” pop-up.
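
Here’s a bare-bones version of the “re-attempt failed ones” part in Python, in case PowerShell or bash isn’t your thing. It’s a sketch: `retry` is my own helper name, the delays are arbitrary defaults, and it only retries on `OSError` and `EOFError` (dropped sockets and the like). For FTP specifically you could pass `retry_on=ftplib.all_errors` instead.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry(action: Callable[[], T],
          attempts: int = 5,
          base_delay: float = 2.0,
          max_delay: float = 300.0,
          retry_on: Tuple[Type[BaseException], ...] = (OSError, EOFError),
          sleep: Callable[[float], None] = time.sleep) -> T:
    """Call action until it succeeds, doubling the wait after each failure."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except retry_on:
            if attempt == attempts:
                raise  # out of tries: let the caller see the real error
            sleep(min(max_delay, base_delay * 2 ** (attempt - 1)))
    raise AssertionError("unreachable")
```

Wrap whatever does the actual transfer in a zero-argument function or lambda and hand it to `retry`. Pair it with a resume-capable upload so each retry continues the file instead of starting from byte zero, then schedule the script with cron or Task Scheduler.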

Now, about CloudMounter: it absolutely makes FTP, SFTP, and cloud protocols feel native, which is slick for drag-and-drop. No contest on ease. Is it perfect? Not really. Mounting as drives can chew up resources, and big bulk moves can slow your whole system to a crawl (as alluded to already). Still, for mixed-protocol workflows, like going from Dropbox to FTP, it’s way more streamlined than juggling 4 different apps.

Compared to direct-client diehards or CLI rsync masters (looking over at the competition here), CloudMounter’s biggest pro is the “it just works” factor for anyone who’d rather not touch terminal windows. But the cons: you’re limited by your system’s stability, and CloudMounter won’t magically patch a broken FTP server.

Bottom line: split files if you must, but try segmented/parallel transfer if your client supports it; automate retries rather than nurse the connection yourself; mount with CloudMounter if the workflow needs to be painless (but bring some patience for big jobs). If this still blows up, it’s time to call your sysadmin—or finally convince your client to move to something past FTP’s Jurassic era.